[ICLR 2019] ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware

2020. 7. 28. 02:41 · Uncategorized

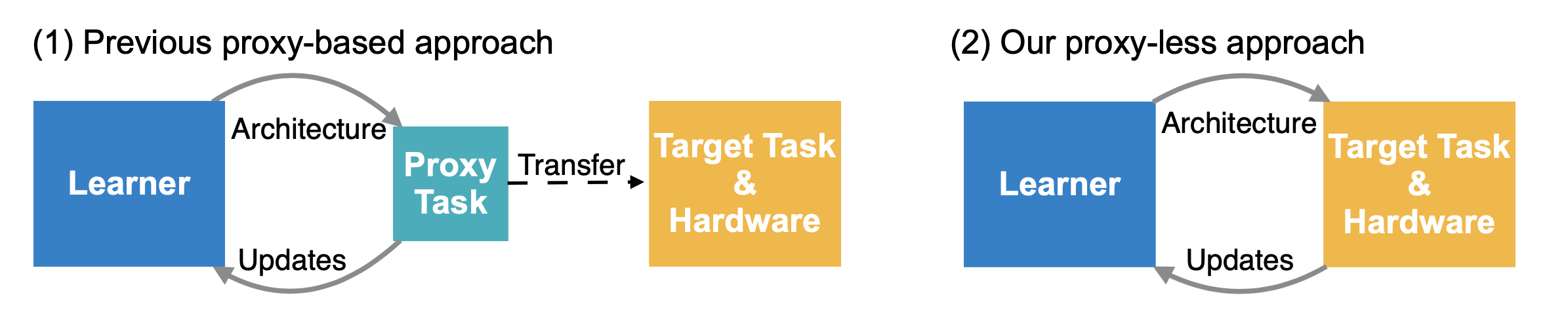

- Directly applying NAS to a large scale task (e.g. ImageNet) is computationally expensive or impossible.

- To work around this, some works search for building blocks on proxy tasks, such as training for fewer epochs, starting with a smaller dataset (e.g. CIFAR-10), or learning with fewer blocks. $\to$ However, blocks found on a proxy task are not guaranteed to be optimal on the target task.

- ProxylessNAS instead learns architectures directly on the target task and target hardware, without any proxy.

Method

1. Construction of Over-Parameterized Network

Notation

- $N(e_1, \dots, e_n)$: a neural network, where each $e_i$ represents a certain edge in the directed acyclic graph (DAG).

- $O = \{o_i\}$: the set of $N$ candidate primitive operations (e.g. conv, pooling, ...)

- To construct the over-parameterized network that includes any architecture in the search space, each edge is set to be a mixed operation ($m_O$) with $N$ parallel paths as shown in Figure 2.

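- Given input $x$, the output of a mixed operation $m_O$ is defined based on the outputs of its $N$ paths, e.g. as a plain sum (One-Shot) or as a sum weighted by the softmax of real-valued architecture parameters $\alpha_i$ (DARTS):

  $$m_O^{\text{One-Shot}}(x) = \sum_{i=1}^{N} o_i(x), \qquad m_O^{\text{DARTS}}(x) = \sum_{i=1}^{N} p_i\, o_i(x) = \sum_{i=1}^{N} \frac{\exp(\alpha_i)}{\sum_{j} \exp(\alpha_j)}\, o_i(x)$$

- With either definition, the output feature maps of all $N$ paths must be computed and stored in memory, whereas training a compact model involves only one path.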

- Because of the $N$ different paths, this requires roughly $N$ times the memory of a compact model $\to$ it easily exceeds memory limits.

- ProxylessNAS solves this problem with binarized paths.
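To make the memory issue concrete, here is a minimal toy sketch in plain Python, not the authors' implementation. It assumes 1-D lists as "feature maps" and three hypothetical candidate operations (`op_double`, `op_identity`, `op_zero`) standing in for primitives such as conv or pooling, and it uses a DARTS-style mixed operation (softmax-weighted sum over all $N$ paths). It simply shows that every path's output is materialized.

```python
import math

# Toy "feature maps" are 1-D lists of floats; the three ops are hypothetical
# stand-ins for candidate primitives such as conv or pooling.
def op_double(x):
    return [2.0 * v for v in x]

def op_identity(x):
    return list(x)

def op_zero(x):
    return [0.0 for _ in x]

CANDIDATE_OPS = [op_double, op_identity, op_zero]

def softmax(alphas):
    """p_i = exp(alpha_i) / sum_j exp(alpha_j), shifted by the max for stability."""
    m = max(alphas)
    exps = [math.exp(a - m) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]

def mixed_op(x, alphas, ops=CANDIDATE_OPS):
    """DARTS-style mixed operation: softmax-weighted sum over all N paths.

    Also returns the N per-path outputs, i.e. the feature maps an autograd
    framework would have to keep alive for the backward pass.
    """
    probs = softmax(alphas)
    path_outputs = [op(x) for op in ops]  # every path is evaluated
    out = [sum(p * y[k] for p, y in zip(probs, path_outputs)) for k in range(len(x))]
    return out, path_outputs

x = [1.0, -2.0, 3.0]
out, stored = mixed_op(x, alphas=[0.0, 0.0, 0.0])
print(len(stored))  # 3 stored feature maps, versus 1 for a compact model
```

With equal architecture parameters the weighted sum of $2x$, $x$ and $0$ is just $x$, but the point is `len(stored)`: one output per candidate path is held at the same time, which is what drives the $N$-fold memory cost.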

2. Learning Binarized Path

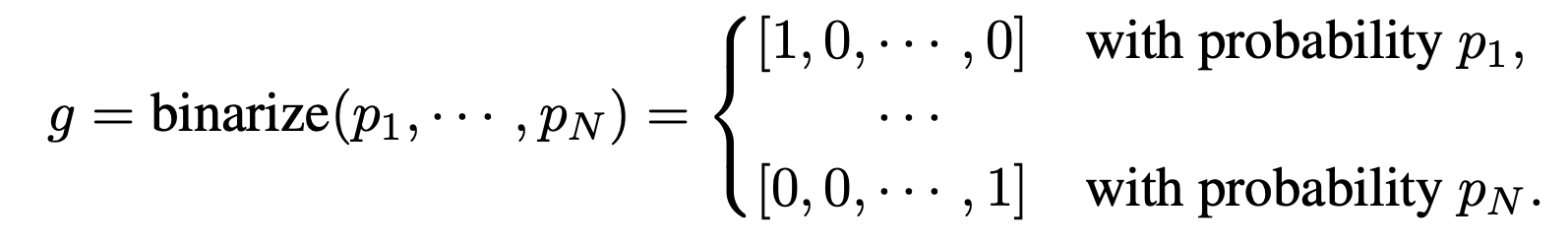

- To save memory, only one path is kept when training the over-parameterized network.

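- Among the $N$ paths, only one path's activations are kept in memory at run time, selected by binary gates $g$. The gates are sampled from the path probabilities $p_i = \frac{\exp(\alpha_i)}{\sum_j \exp(\alpha_j)}$ computed from the architecture parameters $\alpha_i$:

  $$g = \text{binarize}(p_1, \dots, p_N) = \begin{cases} [1, 0, \dots, 0] & \text{with probability } p_1 \\ \qquad\vdots & \\ [0, 0, \dots, 1] & \text{with probability } p_N \end{cases}$$

- The output of the mixed operation is given as:

  $$m_O^{\text{Binary}}(x) = \sum_{i=1}^{N} g_i\, o_i(x) = \begin{cases} o_1(x) & \text{if } g = [1, 0, \dots, 0] \\ \qquad\vdots & \\ o_N(x) & \text{if } g = [0, 0, \dots, 1] \end{cases}$$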

- When training the weight parameters, the architecture parameters are frozen, and binary gates are stochastically sampled according to the architecture parameters for each input batch.

- Then the weight parameters of active paths are updated via standard gradient descent.

- When training the architecture parameters, the weight parameters are frozen, the binary gates are reset, and the architecture parameters are updated on the validation set.

- These two steps are performed alternately.

- Once the training of architecture parameters is finished, the path with the highest path weight is kept and the other redundant paths are pruned.
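The binarized-path procedure can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: `train_weights_step` and `train_arch_step` are hypothetical hooks standing in for real gradient updates, "resetting" the gates is modelled as drawing a fresh sample, and the toy operations are placeholders.

```python
import math
import random

def softmax(alphas):
    """Path probabilities p_i = exp(alpha_i) / sum_j exp(alpha_j), computed stably."""
    m = max(alphas)
    exps = [math.exp(a - m) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]

def sample_binary_gates(alphas, rng):
    """Return a one-hot gate vector g with g_i = 1 with probability p_i."""
    probs = softmax(alphas)
    i = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return [1 if j == i else 0 for j in range(len(probs))]

def binary_mixed_op(x, gates, ops):
    """Evaluate only the path whose gate is open, so only one feature map is kept."""
    return ops[gates.index(1)](x)

def search(alphas_per_edge, num_steps, train_weights_step, train_arch_step, rng):
    """Alternate weight and architecture updates, then prune each edge to one path.

    train_weights_step and train_arch_step are hypothetical hooks, not a real API.
    """
    for _ in range(num_steps):
        # Step 1: architecture parameters frozen; sample gates, update active-path weights.
        gates = [sample_binary_gates(a, rng) for a in alphas_per_edge]
        train_weights_step(gates)
        # Step 2: weights frozen; reset the gates (assumption: draw a fresh sample)
        # and update the architecture parameters on validation data.
        gates = [sample_binary_gates(a, rng) for a in alphas_per_edge]
        train_arch_step(alphas_per_edge, gates)
    # Keep the path with the highest path weight on each edge (argmax alpha = argmax p).
    return [max(range(len(a)), key=lambda i: a[i]) for a in alphas_per_edge]

# Toy usage: three hypothetical candidate ops on 1-D lists, two edges.
ops = [
    lambda v: [2.0 * t for t in v],  # stand-in for a conv
    lambda v: list(v),               # identity
    lambda v: [0.0] * len(v),        # zero op
]
print(binary_mixed_op([1.0, 2.0], [0, 1, 0], ops))  # [1.0, 2.0]

def dummy_weights_step(gates):
    pass  # placeholder: real code would run SGD on the sampled paths only

def dummy_arch_step(alphas_per_edge, gates):
    for a in alphas_per_edge:
        a[1] += 0.1  # placeholder: pretend the validation loss always favors path 1

alphas = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(search(alphas, 5, dummy_weights_step, dummy_arch_step, random.Random(0)))  # [1, 1]
```

The dummy hooks only exercise the control flow; how the architecture parameters actually receive gradients through the sampled gates is left to the hypothetical `train_arch_step`.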